Downloading Pwned Passwords Hashes with the HIBP Downloader

Published on 2022-05-19 22:34:54 UTC by Troy Hunt

Just before Christmas, the promise to launch a fully open source Pwned Passwords fed with a firehose of fresh data from the FBI and NCA finally came true. We pushed out the code, published the blog post, dusted ourselves off and that was that. Kind of - there was just one thing remaining...

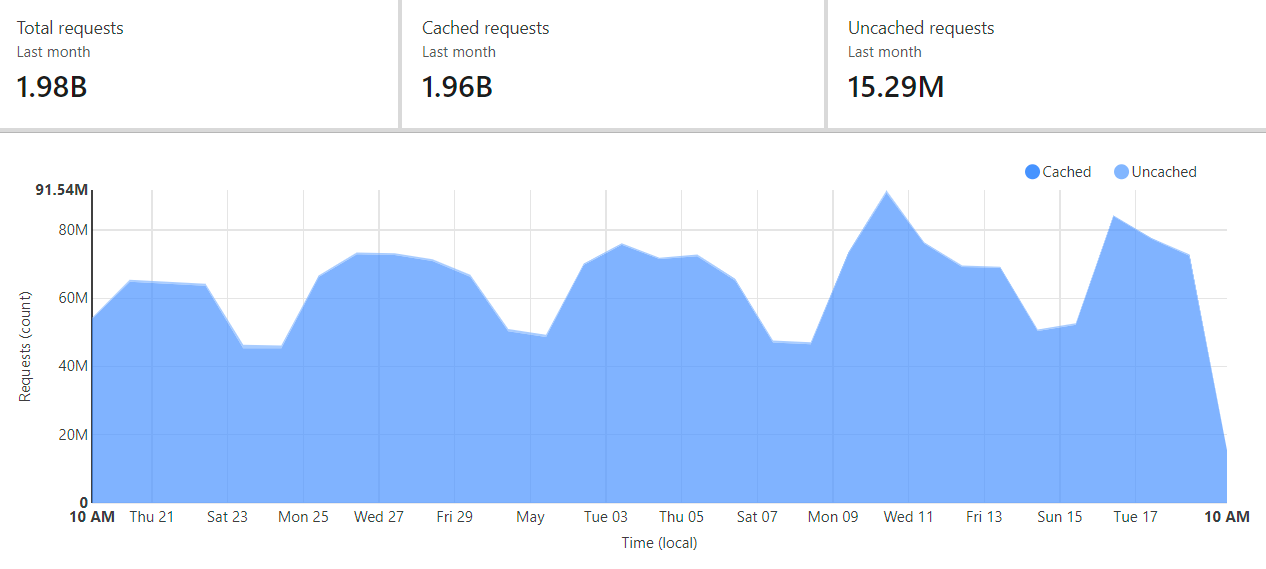

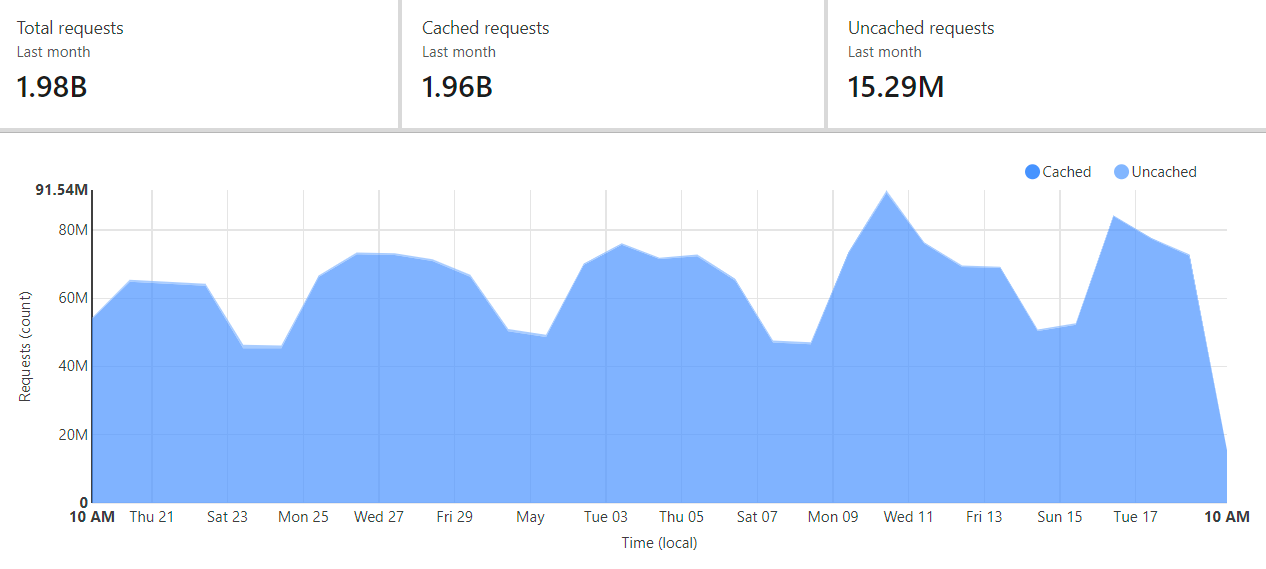

The k-anonymity API is lovely and that's not just me saying that, that's people voting with their feet:

That's already 58% by volume from my December blog post, only 5 months ago to the day. It's also just a rounding error off a 100% cache hit ratio too 😎 But the bit that remained was the promise I made in that last blog post:

> Lastly, as of right now, the code to take the ingestion pipeline and dump all passwords into a downloadable corpus is yet to be written. We want to do this - we have every intention of doing this - but given how long it frequently was between releases, we don't feel the need to rush.

The idea of taking 16^5 hash ranges, bundling them all up into a single monolithic archive then making it all downloadable seemed a non-trivial task. Plus, I was still licking my wounds from the massive costs I got hit with after releasing the last archives and them exceeding the cacheable limit at the time on Cloudflare's edge. And that's when it hit me - why don't we just write a script to download all the hashes from the same k-anonymity API so many organisations are already using? It's just 16^5 separate requests and the responses could be dumped into a big text file, how hard could it be? It'd almost all be cached and there's super efficient brotli compression between the client and the Cloudflare edge so it should be fast too, so... why not?
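To make the idea concrete, here's a minimal Python sketch of "enumerate every 5-character prefix, request each range, append the results to one file". It is not the downloader's actual code. It assumes the public range endpoint at `https://api.pwnedpasswords.com/range/{prefix}`, which returns lines of `SUFFIX:COUNT`; the function names and the User-Agent string are made up for illustration.

```python
from itertools import product
from typing import Callable, Iterable, Iterator, List
from urllib.request import Request, urlopen

# Assumed endpoint: the public Pwned Passwords k-anonymity range API.
RANGE_URL = "https://api.pwnedpasswords.com/range/{prefix}"
HEX_DIGITS = "0123456789ABCDEF"


def all_prefixes() -> Iterator[str]:
    """Yield every 5-character hex prefix, 00000 through FFFFF (16^5 in total)."""
    for digits in product(HEX_DIGITS, repeat=5):
        yield "".join(digits)


def fetch_range(prefix: str) -> str:
    """Fetch one hash range; the body is a list of SUFFIX:COUNT lines."""
    request = Request(
        RANGE_URL.format(prefix=prefix),
        headers={"User-Agent": "pwned-range-sketch"},  # hypothetical name
    )
    with urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8")


def expand_range(prefix: str, body: str) -> List[str]:
    """Re-attach the prefix so each line becomes FULLHASH:COUNT."""
    return [prefix + line.strip() for line in body.splitlines() if line.strip()]


def dump_ranges(
    path: str,
    prefixes: Iterable[str],
    fetch: Callable[[str], str] = fetch_range,
) -> int:
    """Write every expanded line for the given prefixes to one text file."""
    written = 0
    with open(path, "w", encoding="ascii", newline="\n") as out:
        for prefix in prefixes:
            for line in expand_range(prefix, fetch(prefix)):
                out.write(line + "\n")
                written += 1
    return written
```

Calling `dump_ranges("pwned.txt", all_prefixes())` would walk all 1,048,576 ranges one at a time, which is correct but slow; passing `fetch` as a parameter keeps the network call swappable for testing or caching.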

I threw the idea over to Stefán and in his typically cool Icelandic way he not only built the feature, but did it much better than I was thinking in the first place. So, here's how it works in point form:

- There's a public repository for the Pwned Passwords Downloader over on GitHub where you're welcome to grab the code, submit PRs or raise issues

- There's also a NuGet package so if you don't want to download and compile code yourself, you can pull the executable directly via the command line

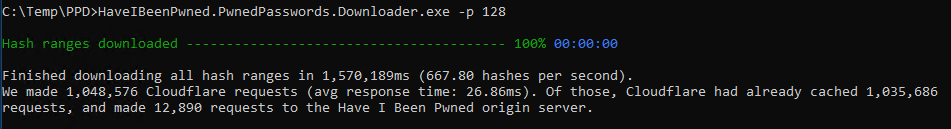

And that's it. Run it up and it looks like this:

The -p switch defines the level of parallelism to apply and when run in the Azure VM I tested this from, it took 26 minutes to pull everything down. Obviously YMMV based on connection speed, but with that massive cache hit ratio (also reflected in the output above), at least you'll be retrieving almost every single hash range from a location very close to you.
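As a rough illustration of what a parallelism setting like that buys you, here's a small Python sketch that fans range requests out across a thread pool. This is an assumption-laden stand-in, not how the downloader itself is written: `fetch_in_parallel` and its `parallelism` parameter are hypothetical names, and `fetch` is any function you supply that takes a 5-character prefix and returns that range's response body.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, Tuple


def fetch_in_parallel(
    prefixes: Iterable[str],
    fetch: Callable[[str], str],
    parallelism: int = 4,
) -> List[Tuple[str, str]]:
    """Fetch many hash ranges concurrently and return (prefix, body) pairs.

    `parallelism` caps how many requests are in flight at once, loosely
    mirroring the idea behind the -p switch (hypothetical equivalent).
    """
    prefix_list = list(prefixes)
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        # pool.map returns results in input order, even though the
        # underlying calls may complete in any order.
        bodies = list(pool.map(fetch, prefix_list))
    return list(zip(prefix_list, bodies))
```

Threads suit this workload because each request spends most of its time waiting on the network, so raising `parallelism` mostly trades more open connections for a shorter total run.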

I'm conscious the one remaining gap we have is that this doesn't make the NTLM versions downloadable and there are folks out there eagerly awaiting that. I suspect we'll take a similar approach there so stay tuned for that, it shouldn't be a biggy now we've established a pattern. I'm also conscious that to make this tool more useful, it would be handy to know when to actually run it by seeing how many new password hashes have been added since a given date. That's on the list - we know it's wanted - and especially as the volume of inbound passwords ramps up I know it'll be super useful for people.

So, go forth and grab the tool, pull down the hashes whenever you feel like it and do good things with them. Now I'm kinda curious to see what those API hit numbers look like once the masses grab this tool and make 1M+ requests each 😊

https://www.troyhunt.com/downloading-pwned-passwords-hashes-with-the-hibp-downloader/

Source: Troy Hunt's Blog
