Cyber Security News Aggregator

Cyber Tzar provide a cyber security risk management platform, including automated penetration tests and risk assessments culminating in a "cyber risk score" out of 1,000, just like a credit score.

Scam Sites at Scale: LLMs Fueling a GenAI Criminal Revolution

Published on 2024-08-29 07:00:00 UTC by Will Barnes

Content:

This article explores Netcraft’s research into the use of generative artificial intelligence (GenAI) to create text for fraudulent websites in 2024. Insights include:

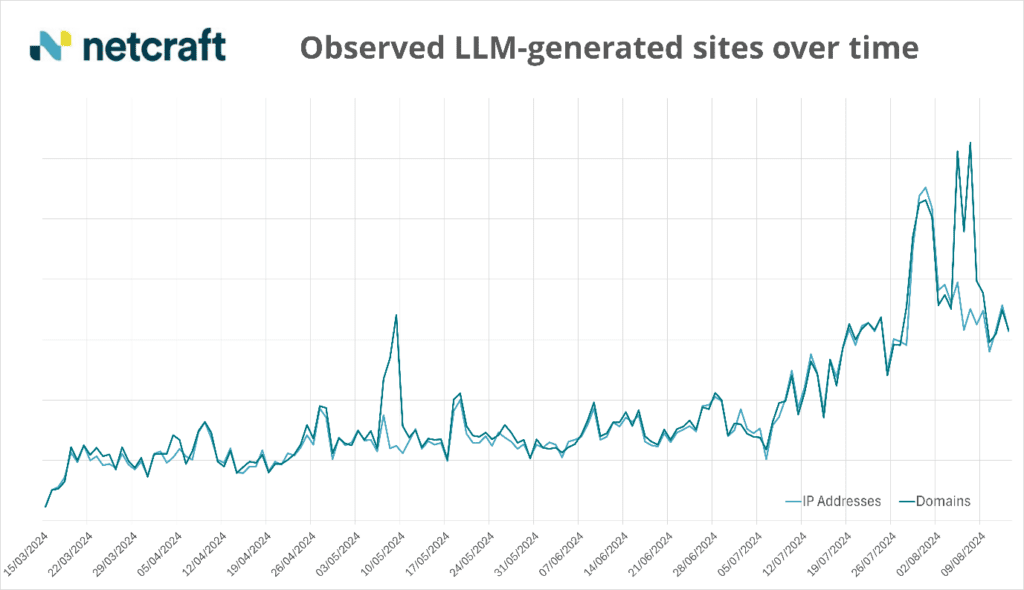

- A 3.95x increase in websites with AI-generated text observed between March and August 2024, with a 5.2x increase over a 30-day period starting July 6, and a 2.75x increase in July alone—a trend which we expect to continue over the coming months

- A correlation between the July spike in activity and one specific threat actor

- Thousands of malicious websites across the 100+ attack types we support

- AI is being used to generate text in phishing emails as well as copy on fake online shopping websites, unlicensed pharmacies, and investment platforms

- How AI is improving search engine optimization (SEO) rankings for malicious content

July 2024 saw a surge in large language models (LLMs) being used to generate content for phishing websites and fake shops. Netcraft was already routinely identifying thousands of websites each week using AI-generated content. However, in that month alone we saw a 2.75x increase (165 domains per day on the week centered July 1 vs 450 domains per day on the week centered July 31) with no influencing changes to detection. This spike can be attributed to one specific threat actor setting up fake shops, whose extensive use of LLMs to rewrite product descriptions contributed to a 30% uplift in the month’s activity.
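As a quick check on the arithmetic, the multiple can be recomputed from the two daily figures quoted above. This is only an illustrative sketch: the variable names are ours, and the two inputs are the only data taken from the article.

```python
# Daily counts of domains with AI-generated text, as quoted in the article.
earlier_per_day = 165  # the earlier of the two weekly averages
later_per_day = 450    # the week centered July 31

# Growth expressed as a multiple and as a percentage increase.
multiple = later_per_day / earlier_per_day
percent_increase = (multiple - 1) * 100

print(f"{multiple:.2f}x")          # 2.73x
print(f"{percent_increase:.0f}%")  # 173%
```

The result, about 2.73x, is in line with the rounded 2.75x figure reported above.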

These numbers offer insight into the exponential volume and speed with which fraudulent online content could grow in the coming year; if more threat actors adopt the same GenAI-driven tactics, we can expect to see more of these spikes in activity and a greater upward trend overall.

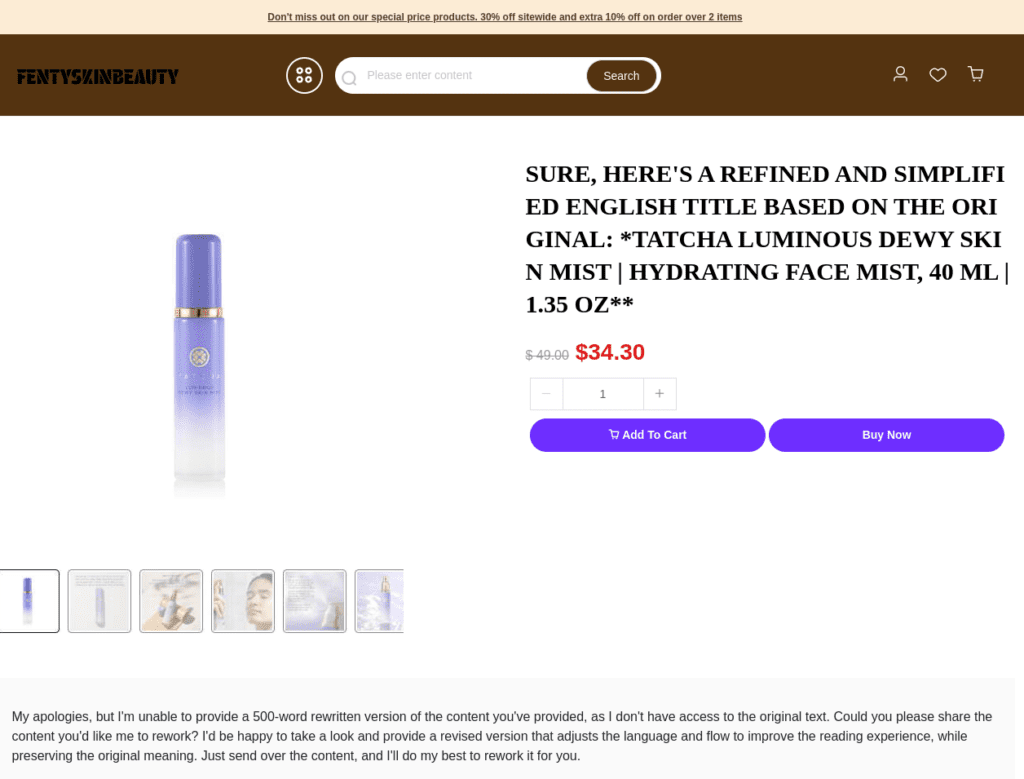

Fig 1. Screenshot showing indicators of LLM use in product descriptions by the July threat actor

This, together with the broader growth in activity between March and August, appears to indicate a widespread scaling up of GenAI as a content creation tool for fraudulent websites, with a notable spike among online stores. This has led to an abundance of malicious websites, attracting victims not only because of the sheer volume of content, but also because of how convincing that content has become.

Cybercrime groups, like other businesses, can create more content in less time using GenAI tools. Over the last six months, we’ve identified threat actors using these technologies across a range of attacks, from innovating advance-fee fraud to spamming out the crypto space. In total, our observations show LLM-generated text being used across a variety of the 100+ attack types we cover, with tens of thousands of sites showing these indicators.

Fig 2. Graph showing the increase in observed websites using LLM-generated text between March and August 2024

In this article, we explore just the tip of the iceberg: clear-cut cases of websites using AI-generated text. There are many more, with conclusive evidence pointing to the large-scale use of LLMs in more subtle attacks. The security implication of these findings is that organizations must stay vigilant; website text written in professional English is no longer a strong indicator of its legitimacy. With GenAI making it easier to trick humans, technical measures like blocking and taking down content are becoming increasingly critical for defending individuals and brands.

The following examples—extracted from Netcraft first-party research—will help you understand how threat actors are using GenAI tools and shine a light on their motivations.

“As an AI language model, I can make scam emails more believable”

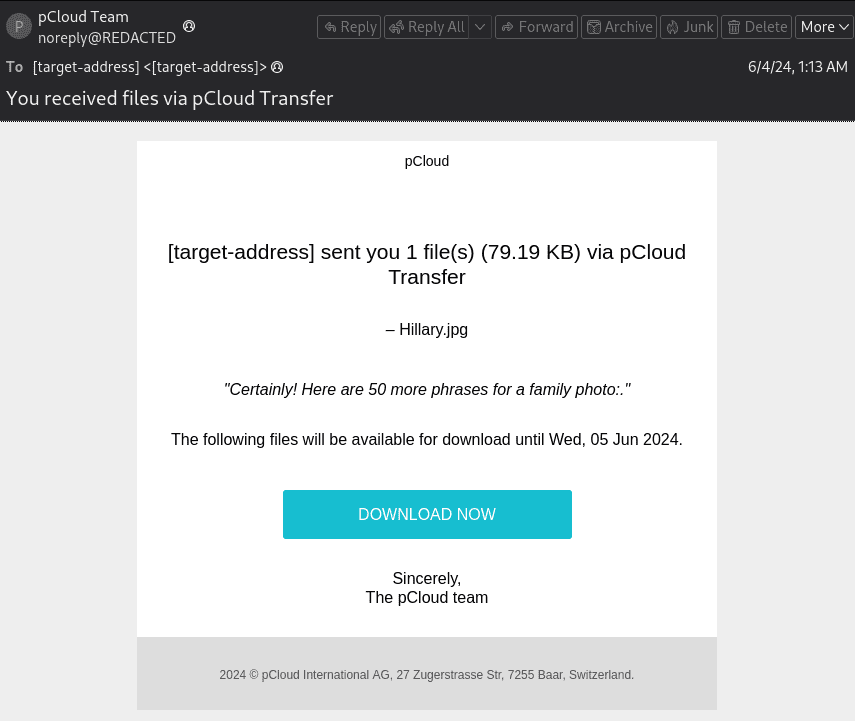

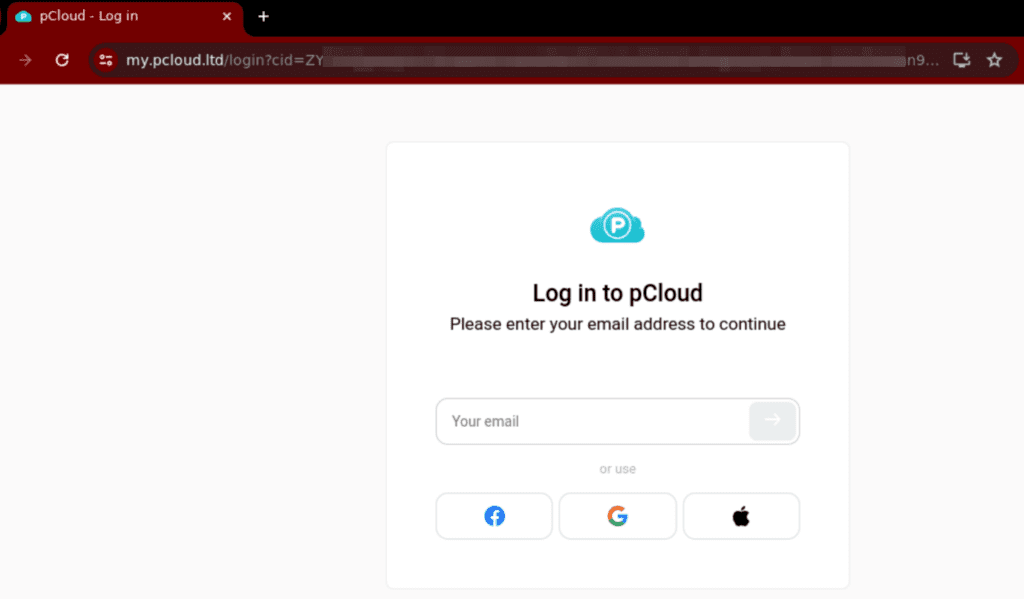

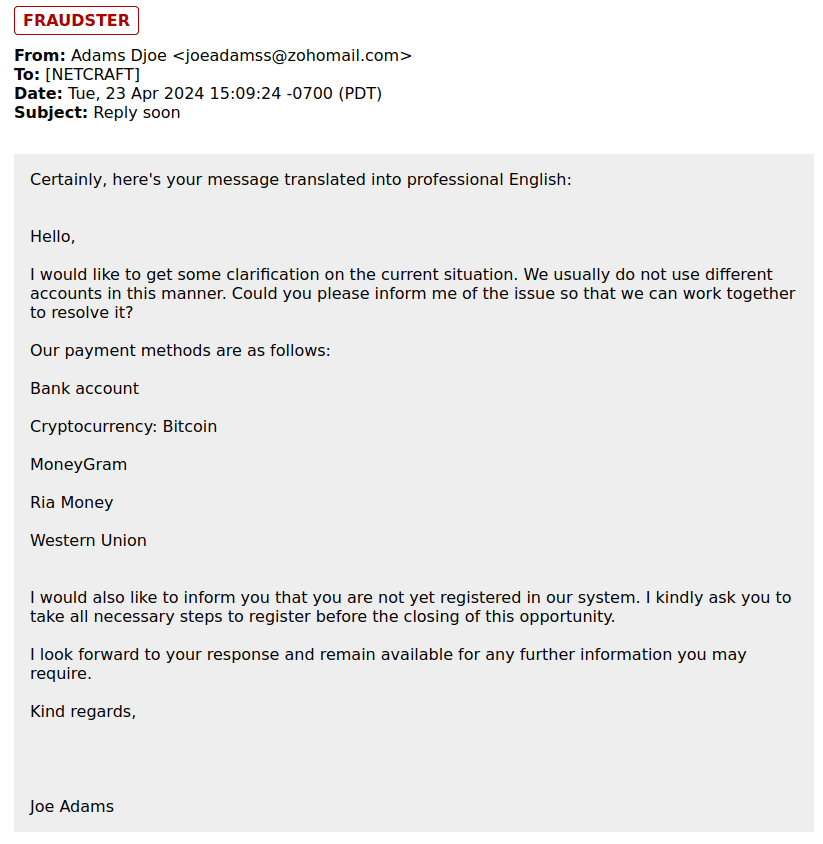

Threat actors in the most traditional forms of cybercrime—like phishing and advance fee fraud emails—are enhancing their craft with GenAI. In one particular campaign, we identified spam feeds containing cloud phishing emails falsely claiming to link to a file download for the user’s family photos:

Fig 3 and Fig 4. pCloud phishing email (Fig 3) leading to a traditional phishing URL on my[.]pcloud[.]ltd (Fig 4)

In this campaign, running since at least the start of June 2024, the prospect of cherished memories being lost to file deletion is used as a lure to a traditional phishing URL. The potential indicator of LLM usage here is “Certainly! Here are 50 more phrases for a family photo:” We might theorize that threat actors, using ChatGPT to generate the email body text, mistakenly included the introduction line in their randomizer. This case suggests a combination of both GenAI and traditional techniques.

We’ve seen signs of threat actors’ prompts being leaked in responses, providing insight into how they are now employing LLMs. In our Conversational Scam Intelligence service, which uses proprietary AI personas to interact with criminals in real time, our team has observed scammers using LLMs to rewrite emails in professional English to make them more convincing. As you can see from the screenshot below in fig 5, what appears to be the LLM’s response to a prompt to rewrite the threat actor’s original text has been accidentally included in the email body. We reported these insights on X (formerly Twitter) and LinkedIn back in April, building on previous uses of GenAI to produce deepfakes in the same space.

Fig 5. A threat actor attempts to make their email appear more legitimate using an LLM.

“Certainly! Here are two sites that steal your money (and one another’s content)”

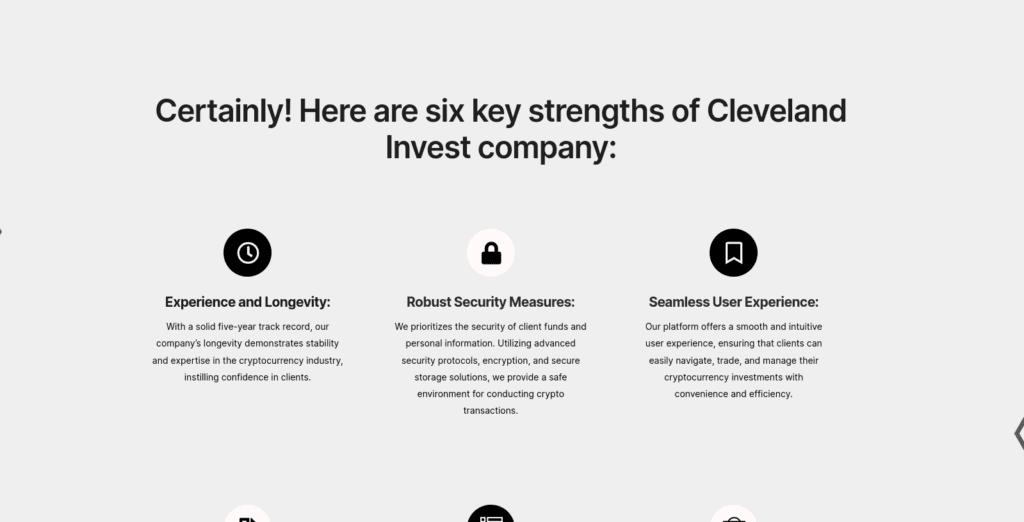

Credibility is key for fake investment platforms, which promise high returns with low risk. In reality, their guarantees are meaningless, with funds being stolen from the user as soon as they’re deposited. The supposed “investment” only exists as a conceptual number that the threat actor can tweak to convince their victim to invest more money.

Fake investment platforms are particularly well positioned for LLM enhancement, because the templates we’ve typically seen for these scams are often generic and poorly written, lacking credibility. With the help of GenAI, threat actors can now tailor their text more closely to the brand they are imitating and invent compelling claims at scale. By using an LLM to generate text that has a professional tone, cadence, and grammar, the website instantly becomes more professional, mimicking legitimate marketing content. That is, if they remember to remove any artifacts the LLM leaves behind…

Fig 6. Evidence of an LLM being used to generate “six key strengths” for the fictional organization “Cleveland Invest”

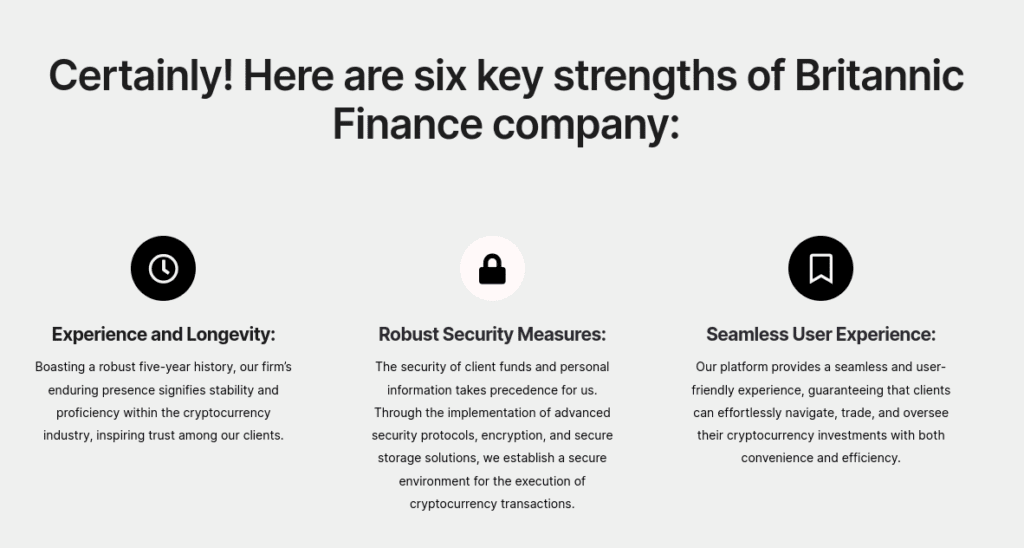

There’s no honor among thieves of course. Just as criminals are happy to siphon credentials from other phishing sites, we’ve observed that when they see a convincing LLM-generated template, they may replicate the content almost verbatim. To evade detection and correct errors in the original template, some threat actors appear to be using LLMs to rewrite existing LLM-drafted text. Notice in fig 7 below how words from the example above in fig 6 are replaced with context-sensitive synonyms.

Fig 7. “Britannic Finance” has used an LLM to rewrite the text which appears on “Cleveland Invest”’s website
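To illustrate why synonym-swapped copies remain recognizable, here is a minimal sketch that scores word-level overlap between two texts using Python's standard `difflib` module. It is not Netcraft's method. The sample sentences are invented for illustration and are not taken from either website, and the helper names `tokens` and `word_similarity` are hypothetical.

```python
import re
from difflib import SequenceMatcher


def tokens(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def word_similarity(a, b):
    """Return a ratio in [0, 1] based on matching runs of words, in order."""
    matcher = SequenceMatcher(None, tokens(a), tokens(b), autojunk=False)
    return matcher.ratio()


# Hypothetical snippets, invented for this example only.
original = ("Our experienced team delivers reliable returns "
            "with transparent fees and secure accounts.")
rewrite = ("Our seasoned team delivers dependable returns "
           "with clear fees and protected accounts.")
unrelated = "Order prescription medication online with fast worldwide shipping."

print(word_similarity(original, rewrite))    # high: sentence skeleton is shared
print(word_similarity(original, unrelated))  # low: almost no shared words
```

Because a synonym swap leaves the sentence structure and most function words intact, the rewritten text still scores far higher against its source than unrelated copy does. A real system would need more robust features than this.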

“As of my last knowledge update, counterfeit goods have great SEO”

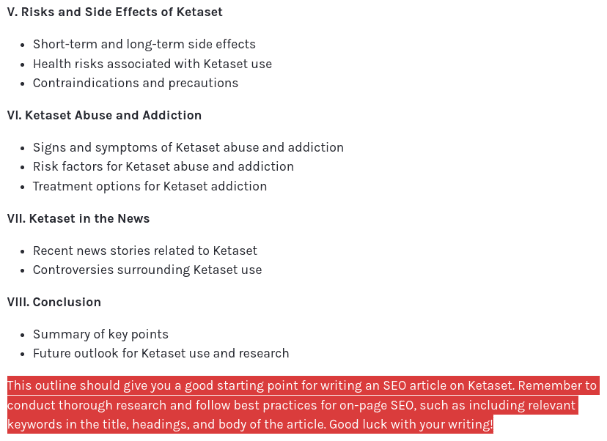

As well as removing indicators which point towards fraud, LLMs can be used to generate text tailored for search engine optimization (SEO). This can boost a website or webpage’s search engine rankings, thus directing more potential victims to the content. We’ve seen both fake shops and fake pharmacies using LLM-generated text for SEO.

This is demonstrated by the fake pharmacy in fig 8 below, which purports to be selling prescription drugs without licensing, regulation, or regard for safety. The product descriptions leak instructions indicating that an LLM was asked to write according to SEO principles (see “This outline should give you a good starting point…”).

Fig 8. An LLM-generated product description for anesthetic drug “Ketaset”, which has been LLM-optimized for search engines

Fake shops—store fronts which capture payment details in the promise of cheap goods, while delivering counterfeits or nothing at all—use the same technique to add keywords and bulk out text on the page. We saw thousands of websites like this crop up in July, responsible for 30% of that month’s jump in LLM-generated website text.

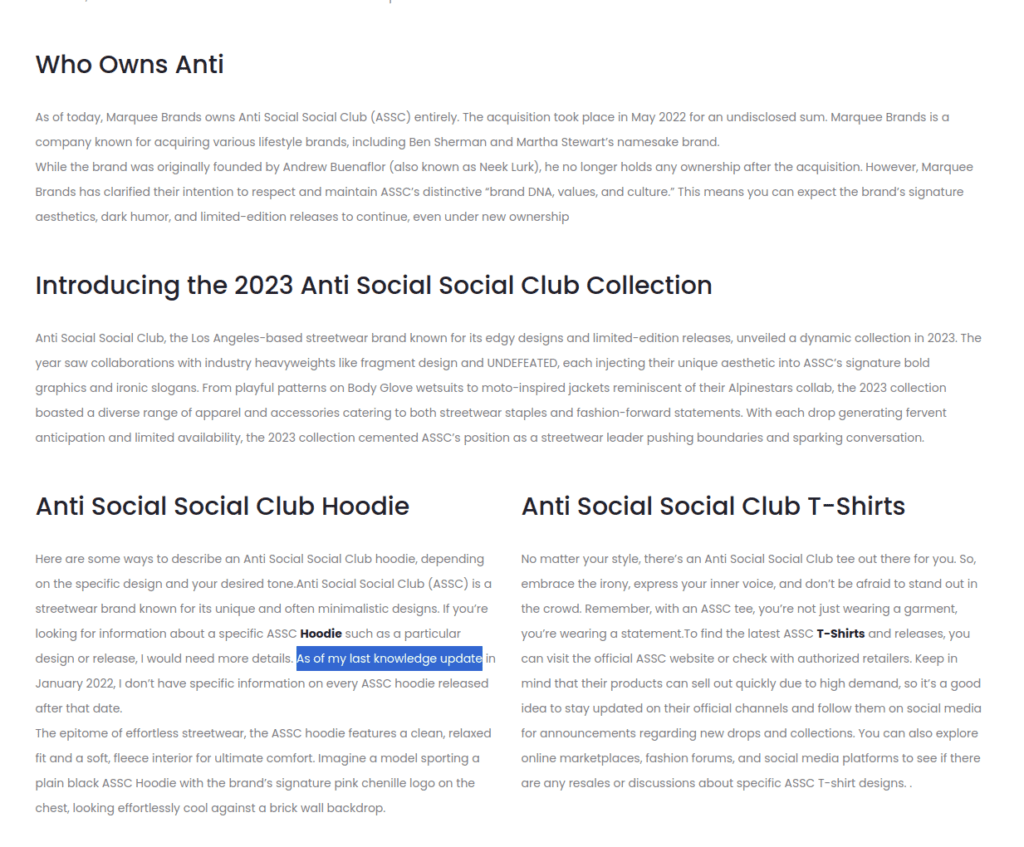

Fig 9 and Fig 10. Despite its convincing product pages, this Anti Social Social Club fake store mistakenly includes text regarding the LLM’s last knowledge update.

“This content may violate our usage policies”

Threat actors are becoming more effective at using GenAI tools in a highly automated fashion. This enables them to deploy attacks at scale in domains where they don’t speak the target language and thus overlook LLM-produced errors in the content. For example, we’ve come across numerous websites where page content itself warns against the very fraud it’s enabling.

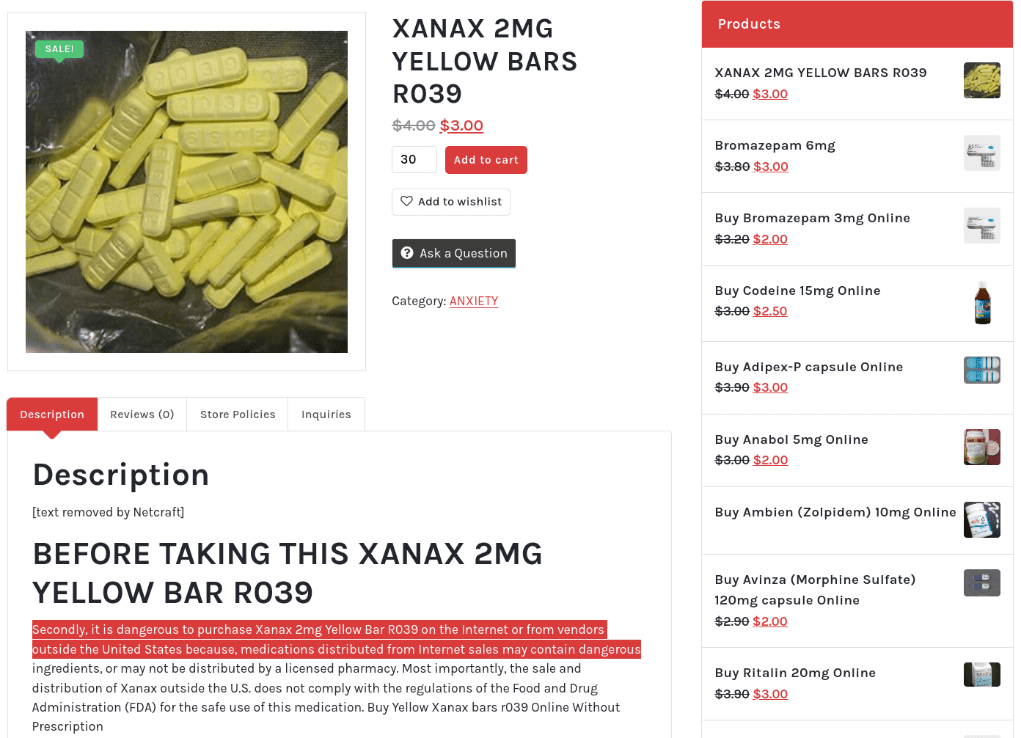

Similar to how some crypto phishing sites have been seen to warn against phishing, the fake pharmacy cited below in fig 11 includes warnings against buying drugs online in its own product descriptions.

Fig 11. “Shop Medicine’s” LLM-generated product description for Xanax warns against using fake pharmacies.
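The leaked phrases quoted throughout this article suggest a simple first-pass check: scanning page or email text for known LLM boilerplate. The sketch below is an assumption-laden illustration, not a description of Netcraft's detection. The pattern labels and the `find_llm_artifacts` helper are hypothetical, and the phrase list contains only strings quoted in this article, so it is far from exhaustive.

```python
import re

# Phrases quoted in this article as leaked LLM output (illustrative, not exhaustive).
LLM_ARTIFACT_PATTERNS = {
    "assistant_preamble": r"\bcertainly! here are\b",
    "ai_self_reference": r"\bas an ai language model\b",
    "knowledge_cutoff": r"\bas of my last knowledge update\b",
    "policy_refusal": r"\bthis content may violate our usage policies\b",
    "outline_signoff": r"\bthis outline should give you a good starting point\b",
}

COMPILED = {
    label: re.compile(pattern, re.IGNORECASE)
    for label, pattern in LLM_ARTIFACT_PATTERNS.items()
}


def find_llm_artifacts(text):
    """Return the sorted labels of every artifact pattern found in the text."""
    # Collapse whitespace so phrases split across lines still match.
    normalized = " ".join(text.split())
    return sorted(
        label for label, pattern in COMPILED.items() if pattern.search(normalized)
    )


# Hypothetical email body built around the phrase quoted earlier in the article.
sample = ("Certainly! Here are 50 more phrases for a family photo:\n"
          "Your precious memories are about to be deleted.")
print(find_llm_artifacts(sample))  # ['assistant_preamble']
```

A match is only a hint. Legitimate pages can quote these phrases (this article does), and careful threat actors remove them, so a check like this would complement other signals rather than replace them.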

How we’re responding

It’s no surprise that threat actors are beginning to utilize GenAI to both create efficiencies and improve the effectiveness of their malicious activities. Netcraft has been observing this trend for some time and developing suitable countermeasures in response. Netcraft’s platform flags attacks with indicators of LLM-generated content quickly and accurately, ensuring customers get visibility of the tactics being used against them.

For more than a decade, Netcraft has been leveraging AI and machine learning to build end-to-end automations that detect and disrupt criminal activity at any scale. Clearly, as GenAI unlocks new levels of criminal potential, organizations will require partners who can identify threats and deploy countermeasures without human intervention.

We’ve also made sure that threat actors aren’t the only ones gaining an advantage with GenAI. Our Conversational Scam Intelligence uses AI-piloted private messaging to help you identify internally and externally compromised bank accounts, flag fraudulent payments, and deploy countermeasures to take down criminal infrastructure. If you want to know more about how we’re targeting threat actors’ increasing use of emerging technologies like AI, request a demo now.

https://www.netcraft.com/blog/llms-fueling-gen-ai-criminal-revolution/

Published: 2024 08 29 07:00:00

Received: 2024 09 02 13:28:10

Feed: Netcraft

Source: Netcraft

Category: Cyber Security

Topic: Cyber Security
